#search engine bias

FBI to Investigate Why Biden’s Events Keep Showing Up in Google Searches

Google Glitches: Why Biden’s Campaign Keeps Haunting Search Results After He’s Left the Race

Kamala Who? The Search Engine That Refuses to Move On

Silicon Valley, CA: In an era where technology is supposed to keep us more informed than ever, one might assume that even the most basic facts are easy to find. Yet, for reasons that continue to baffle experts and voters alike, Google, the omnipresent…

#2024 election#algorithm confusion#Biden campaign#Biden vs. Trump#campaign search#digital ghost#digital immortality#election satire#FBI investigation#Google glitch#Harris campaign#internet glitch.#Kamala Harris#online visibility#political humor#political mix-up#political search results#search engine bias#search engine error#SEO problems

0 notes

Ok so in Bia, they don't have google, they have "HOCUS"

#i love dcla and d+la shows and their made up search engines#siempre fui yo had something just called 'buscar'#sara gives bia a second chance

5 notes

New Research Finds Sixteen Major Problems With RAG Systems, Including Perplexity

New Post has been published on https://thedigitalinsider.com/new-research-finds-sixteen-major-problems-with-rag-systems-including-perplexity/

A recent study from the US has found that the real-world performance of popular Retrieval Augmented Generation (RAG) search systems such as Perplexity and Bing Copilot falls far short of both the marketing hype and the popular adoption that have garnered headlines over the last 12 months.

The project, which involved extensive survey participation featuring 21 expert voices, found no fewer than 16 areas in which the studied RAG systems (You Chat, Bing Copilot and Perplexity) gave cause for concern:

1: A lack of objective detail in the generated answers, with generic summaries and scant contextual depth or nuance.

2: Reinforcement of perceived user bias, where a RAG engine frequently fails to present a range of viewpoints and instead infers and reinforces user bias from the way the user phrases a question.

3: Overly confident language, particularly in subjective responses that cannot be empirically established, which can lead users to trust the answer more than it deserves.

4: Simplistic language and a lack of critical thinking and creativity, where responses effectively patronize the user with ‘dumbed-down’ and ‘agreeable’ information, instead of thought-through cogitation and analysis.

5: Misattributing and mis-citing sources, where the answer engine uses cited sources that do not support its response/s, fostering the illusion of credibility.

6: Cherry-picking information from inferred context, where the RAG agent appears to be seeking answers that support its generated contention and its estimation of what the user wants to hear, instead of basing its answers on objective analysis of reliable sources (possibly indicating a conflict between the system’s ‘baked’ LLM data and the data that it obtains on-the-fly from the internet in response to a query).

7: Omitting citations that support statements, where source material for responses is absent.

8: Providing no logical schema for its responses, where users cannot question why the system prioritized certain sources over other sources.

9: Limited number of sources, where most RAG systems typically provide around three supporting sources for a statement, even where a greater diversity of sources would be applicable.

10: Orphaned sources, where data from all or some of the system’s supporting citations is not actually included in the answer.

11: Use of unreliable sources, where the system appears to have preferred a source that is popular (i.e., in SEO terms) rather than factually correct.

12: Redundant sources, where the system presents multiple citations in which the source papers are essentially the same in content.

13: Unfiltered sources, where the system offers the user no way to evaluate or filter the offered citations, forcing users to take the selection criteria on trust.

14: Lack of interactivity or explorability, wherein several of the user-study participants were frustrated that RAG systems did not ask clarifying questions, but assumed user-intent from the first query.

15: The need for external verification, where users feel compelled to perform independent verification of the supplied response/s, largely removing the supposed convenience of RAG as a ‘replacement for search’.

16: Use of academic citation methods, such as [1] or [34]; this is standard practice in scholarly circles, but can be unintuitive for many users.

For the work, the researchers assembled 21 experts in artificial intelligence, healthcare and medicine, applied sciences, and education and social sciences, all either post-doctoral researchers or PhD candidates. The participants interacted with the tested RAG systems whilst speaking their thought processes out loud, so that the researchers could follow their reasoning.

The paper extensively quotes the participants’ misgivings and concerns about the performance of the three systems studied.

The methodology of the user-study was then systematized into an automated study of the RAG systems, using browser control suites:

‘A large-scale automated evaluation of systems like You.com, Perplexity.ai, and BingChat showed that none met acceptable performance across most metrics, including critical aspects related to handling hallucinations, unsupported statements, and citation accuracy.’

The authors argue at length (and assiduously, in the comprehensive 27-page paper) that both new and experienced users should exercise caution when using the class of RAG systems studied. They further propose a new system of metrics, based on the shortcomings found in the study, that could form the foundation of greater technical oversight in the future.

However, the growing public usage of RAG systems prompts the authors also to advocate for apposite legislation and a greater level of enforceable governmental policy in regard to agent-aided AI search interfaces.

The study comes from five researchers across Pennsylvania State University and Salesforce, and is titled Search Engines in an AI Era: The False Promise of Factual and Verifiable Source-Cited Responses. The work covers RAG systems up to the state of the art in August of 2024.

The RAG Trade-Off

The authors preface their work by reiterating four known shortcomings of Large Language Models (LLMs) where they are used within Answer Engines.

Firstly, they are prone to hallucinate information, and lack the capability to detect factual inconsistencies. Secondly, they have difficulty assessing the accuracy of a citation in the context of a generated answer. Thirdly, they tend to favor data from their own pre-trained weights, and may resist data from externally retrieved documentation, even though such data may be more recent or more accurate.

Finally, RAG systems tend towards people-pleasing, sycophantic behavior, often at the expense of accuracy of information in their responses.

All these tendencies were confirmed in both aspects of the study, among many novel observations about the pitfalls of RAG.

The paper views OpenAI’s SearchGPT RAG product (released to subscribers last week, after the new paper was submitted) as likely to encourage the user adoption of RAG-based search systems, in spite of the foundational shortcomings that the survey results hint at*:

‘The release of OpenAI’s ‘SearchGPT,’ marketed as a ‘Google search killer’, further exacerbates [concerns]. As reliance on these tools grows, so does the urgency to understand their impact. Lindemann introduces the concept of Sealed Knowledge, which critiques how these systems limit access to diverse answers by condensing search queries into singular, authoritative responses, effectively decontextualizing information and narrowing user perspectives.

‘This “sealing” of knowledge perpetuates selection biases and restricts marginalized viewpoints.’

The Study

The authors first tested their study procedure on three out of 24 selected participants, all invited by means such as LinkedIn or email.

The first stage, for the remaining 21, involved Expertise Information Retrieval, where participants averaged around six search enquiries over a 40-minute session. This section concentrated on finding and verifying answers to fact-based questions that could, potentially, be resolved empirically.

The second phase concerned Debate Information Retrieval, which dealt instead with subjective matters, including ecology, vegetarianism and politics.

Generated study answers from Perplexity (left) and You Chat (right). Source: https://arxiv.org/pdf/2410.22349

Since all of the systems allowed at least some level of interactivity with the citations provided as support for the generated answers, the study subjects were encouraged to interact with the interface as much as possible.

In both cases, the participants were asked to formulate their enquiries both through a RAG system and a conventional search engine (in this case, Google).

The three Answer Engines – You Chat, Bing Copilot, and Perplexity – were chosen because they are publicly accessible.

The majority of the participants were already users of RAG systems, at varying frequencies.

Due to space constraints, we cannot break down each of the exhaustively documented sixteen key shortcomings found in the study, but here present a selection of some of the most interesting and enlightening examples.

Lack of Objective Detail

The paper notes that users found the systems’ responses frequently lacked objective detail, across both the factual and subjective responses. One commented:

‘It was just trying to answer without actually giving me a solid answer or a more thought-out answer, which I am able to get with multiple Google searches.’

Another observed:

‘It’s too short and just summarizes everything a lot. [The model] needs to give me more data for the claim, but it’s very summarized.’

Lack of Holistic Viewpoint

The authors express concern about this lack of nuance and specificity, and state that the Answer Engines frequently failed to present multiple perspectives on any argument, tending to side with a perceived bias inferred from the user’s own phrasing of the question.

One participant said:

‘I want to find out more about the flip side of the argument… this is all with a pinch of salt because we don’t know the other side and the evidence and facts.’

Another commented:

‘It is not giving you both sides of the argument; it’s not arguing with you. Instead, [the model] is just telling you, “you’re right… and here are the reasons why.”’

Confident Language

The authors observe that all three tested systems exhibited the use of over-confident language, even for responses that cover subjective matters. They contend that this tone will tend to inspire unjustified confidence in the response.

A participant noted:

‘It writes so confidently, I feel convinced without even looking at the source. But when you look at the source, it’s bad and that makes me question it again.’

Another commented:

‘If someone doesn’t exactly know the right answer, they will trust this even when it is wrong.’

Incorrect Citations

Another frequent problem was misattribution of sources cited as authority for the RAG systems’ responses, with one of the study subjects asserting:

‘[This] statement doesn’t seem to be in the source. I mean the statement is true; it’s valid… but I don’t know where it’s even getting this information from.’

The new paper’s authors comment†:

‘Participants felt that the systems were using citations to legitimize their answer, creating an illusion of credibility. This facade was only revealed to a few users who proceeded to scrutinize the sources.’

Cherry-Picking Information to Suit the Query

Returning to the notion of people-pleasing, sycophantic behavior in RAG responses, the study found that many answers highlighted a particular point-of-view instead of comprehensively summarizing the topic, as one participant observed:

‘I feel [the system] is manipulative. It takes only some information and it feels I am manipulated to only see one side of things.’

Another opined:

‘[The source] actually has both pros and cons, and it’s chosen to pick just the sort of required arguments from this link without the whole picture.’

For further in-depth examples (and multiple critical quotes from the survey participants), we refer the reader to the source paper.

Automated RAG

In the second phase of the broader study, the researchers used browser-based scripting to systematically solicit enquiries from the three studied RAG engines. They then used an LLM system (GPT-4o) to analyze the systems’ responses.

The statements were analyzed for query relevance and as Pro vs. Con Statements (i.e., whether the response is for, against, or neutral in regard to the implicit bias of the query).

An Answer Confidence Score was also evaluated in this automated phase, based on the Likert scale psychometric testing method. Here the LLM judge was augmented by two human annotators.
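The pro/con labels and the Likert-based confidence score described above feed two of the answer-level metrics listed below (one-sided answer and overconfident answer). The article does not spell out the paper's exact rules, so the following Python is only a minimal sketch under assumed definitions: an answer is treated as one-sided if its statements take only one side (pro or con) of the query's implicit stance, and as overconfident if it is one-sided and also rated 5 on a 1-5 confidence scale. `Stance`, `is_one_sided`, `is_overconfident` and `rate` are hypothetical names, and the labels in the example are invented for illustration.

```python
from enum import Enum


class Stance(Enum):
    """Hypothetical label for one statement, relative to the query's implicit bias."""
    PRO = "pro"
    CON = "con"
    NEUTRAL = "neutral"


def is_one_sided(stances: list[Stance]) -> bool:
    """Assumed rule: the answer takes a side, and only one side appears."""
    has_pro = Stance.PRO in stances
    has_con = Stance.CON in stances
    return has_pro != has_con  # True when exactly one of the two sides is present


def is_overconfident(stances: list[Stance], likert_confidence: int) -> bool:
    """Assumed rule: a one-sided answer rated 5 on a 1-5 confidence scale."""
    if not 1 <= likert_confidence <= 5:
        raise ValueError("confidence must be on a 1-5 Likert scale")
    return is_one_sided(stances) and likert_confidence == 5


def rate(flags: list[bool]) -> float:
    """Fraction of answers for which a metric fired (0.8 means 80%)."""
    return sum(flags) / len(flags) if flags else 0.0


# Invented labels for three answers to charged debate questions.
answers = [
    ([Stance.PRO, Stance.PRO, Stance.NEUTRAL], 5),  # one-sided and very confident
    ([Stance.PRO, Stance.CON], 4),                  # presents both sides
    ([Stance.CON, Stance.NEUTRAL], 3),              # one-sided but hedged
]
one_sided = [is_one_sided(stances) for stances, _ in answers]
overconfident = [is_overconfident(stances, conf) for stances, conf in answers]
print(rate(one_sided), rate(overconfident))
```

Averaging such flags over every answer an engine gives produces percentages of the kind the paper reports. With real data, the labels would come from the GPT-4o judge and the human annotators rather than being hard-coded.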

A third operation involved the use of web-scraping to obtain the full-text content of cited web-pages, through the Jina.ai Reader tool. However, as noted elsewhere in the paper, most web-scraping tools are no more able to access paywalled sites than most people are (though the authors observe that Perplexity.ai has been known to bypass this barrier).

Additional considerations were whether or not the answers cited a source (computed as a ‘citation matrix’), as well as a ‘factual support matrix’ – a metric verified with the help of four human annotators.

Thus eight overarching metrics were obtained: one-sided answer; overconfident answer; relevant statement; uncited sources; unsupported statements; source necessity; citation accuracy; and citation thoroughness.
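The citation matrix and factual support matrix can be pictured as two statements-by-sources grids of 0s and 1s. One records which source each statement cites, and the other records which sources actually support it. The Python below is a minimal sketch of how four of the eight metrics could be read off such grids. The formulas are assumptions inferred from the metric names, not the paper's published definitions, and `Matrix`, `uncited_sources`, `unsupported_statements`, `citation_accuracy` and `citation_thoroughness` are hypothetical names.

```python
# Rows are statements, columns are sources; entries are 0 or 1 (hypothetical encoding).
Matrix = list[list[int]]


def _pairs(cites: Matrix, supports: Matrix) -> list[tuple[int, int]]:
    """Flatten both grids into (cited, supported) pairs, one per statement-source cell."""
    return [(c, s) for c_row, s_row in zip(cites, supports) for c, s in zip(c_row, s_row)]


def uncited_sources(cites: Matrix) -> float:
    """Assumed: share of listed sources that no statement cites."""
    if not cites or not cites[0]:
        return 0.0
    n_sources = len(cites[0])
    uncited = sum(1 for j in range(n_sources) if not any(row[j] for row in cites))
    return uncited / n_sources


def unsupported_statements(supports: Matrix) -> float:
    """Assumed: share of statements that no listed source supports."""
    if not supports:
        return 0.0
    return sum(1 for row in supports if not any(row)) / len(supports)


def citation_accuracy(cites: Matrix, supports: Matrix) -> float:
    """Assumed: share of citations that point to a source supporting the statement."""
    pairs = _pairs(cites, supports)
    n_citations = sum(c for c, _ in pairs)
    correct = sum(1 for c, s in pairs if c and s)
    return correct / n_citations if n_citations else 0.0


def citation_thoroughness(cites: Matrix, supports: Matrix) -> float:
    """Assumed: share of supporting statement-source pairs that are actually cited."""
    pairs = _pairs(cites, supports)
    n_supporting = sum(s for _, s in pairs)
    cited = sum(1 for c, s in pairs if c and s)
    return cited / n_supporting if n_supporting else 0.0


# Invented example: three statements, three sources.
cites = [
    [1, 0, 0],  # statement 1 cites source 1
    [1, 0, 0],  # statement 2 cites source 1
    [0, 1, 0],  # statement 3 cites source 2; source 3 is never cited
]
supports = [
    [1, 0, 0],  # source 1 supports statement 1
    [0, 0, 1],  # only source 3 supports statement 2
    [0, 0, 0],  # no source supports statement 3
]
print(uncited_sources(cites), unsupported_statements(supports),
      citation_accuracy(cites, supports), citation_thoroughness(cites, supports))
```

In the invented example, statement 2 cites source 1 even though source 3 supports it. That is the pattern the authors highlight in their results: a supporting source exists, but a different, incorrect one is cited.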

The material against which these metrics were tested consisted of 303 curated questions from the user-study phase, resulting in 909 answers across the three tested systems.

Quantitative evaluation across the three tested RAG systems, based on eight metrics.

Regarding the results, the paper states:

‘Looking at the three metrics relating to the answer text, we find that evaluated answer engines all frequently (50-80%) generate one-sided answers, favoring agreement with a charged formulation of a debate question over presenting multiple perspectives in the answer, with Perplexity performing worse than the other two engines.

‘This finding adheres with [the findings] of our qualitative results. Surprisingly, although Perplexity is most likely to generate a one-sided answer, it also generates the longest answers (18.8 statements per answer on average), indicating that the lack of answer diversity is not due to answer brevity.

‘In other words, increasing answer length does not necessarily improve answer diversity.’

The authors also note that Perplexity is most likely to use confident language (90% of answers), and that, by contrast, the other two systems tend to use more cautious and less confident language where subjective content is at play.

You Chat was the only RAG framework to achieve zero uncited sources for an answer, with Perplexity at 8% and Bing Chat at 36%.

All models evidenced a ‘significant proportion’ of unsupported statements, and the paper declares†:

‘The RAG framework is advertised to solve the hallucinatory behavior of LLMs by enforcing that an LLM generates an answer grounded in source documents, yet the results show that RAG-based answer engines still generate answers containing a large proportion of statements unsupported by the sources they provide.’

Additionally, all the tested systems had difficulty in supporting their statements with citations:

‘You.Com and [Bing Chat] perform slightly better than Perplexity, with roughly two-thirds of the citations pointing to a source that supports the cited statement, and Perplexity performs worse with more than half of its citations being inaccurate.

‘This result is surprising: citation is not only incorrect for statements that are not supported by any (source), but we find that even when there exists a source that supports a statement, all engines still frequently cite a different incorrect source, missing the opportunity to provide correct information sourcing to the user.

‘In other words, hallucinatory behavior is not only exhibited in statements that are unsupported by the sources but also in inaccurate citations that prohibit users from verifying information validity.’

The authors conclude:

‘None of the answer engines achieve good performance on a majority of the metrics, highlighting the large room for improvement in answer engines.’

* My conversion of the authors’ inline citations to hyperlinks. Where necessary, I have chosen the first of multiple citations for the hyperlink, due to formatting practicalities.

† Authors’ emphasis, not mine.

First published Monday, November 4, 2024

#2024#adoption#agent#agreement#ai#AI search#Analysis#answer engine#Art#artificial#Artificial Intelligence#barrier#Behavior#Bias#bing#browser#circles#comprehensive#Conflict#content#creativity#data#diversity#documentation#Ecology#education#email#emphasis#engine#engines

0 notes

Reasons Why AI is Not Perfect for Website Ranking

Artificial Intelligence (AI) has undoubtedly revolutionized various aspects of our lives, including website ranking and search engine optimization (SEO). With its ability to analyze vast amounts of data and make informed decisions, AI has become an integral part of search engine algorithms. However, despite its numerous advantages, AI is not flawless and has its limitations when it comes to…

#Accuracy of AI algorithms#AI algorithms#AI and search engine optimization (SEO)#AI bias#AI drawbacks in website ranking#AI in digital marketing#AI in web development#AI limitations#AI vs. human judgement#Contextual understanding#Fairness in website ranking#Holistic approach in website ranking#Human expertise#Manipulation vulnerability#Website ranking

0 notes

Conspiratorialism as a material phenomenon

I'll be in TUCSON, AZ from November 8-10: I'm the GUEST OF HONOR at the TUCSON SCIENCE FICTION CONVENTION.

I think it behooves us to be a little skeptical of stories about AI driving people to believe wrong things and commit ugly actions. Not that I like the AI slop that is filling up our social media, but when we look at the ways that AI is harming us, slop is pretty low on the list.

The real AI harms come from the actual things that AI companies sell AI to do. There are the AI gun-detector gadgets that the credulous Mayor Eric Adams put in NYC subways, which led to 2,749 invasive searches and turned up zero guns:

https://www.cbsnews.com/newyork/news/nycs-subway-weapons-detector-pilot-program-ends/

Any time AI is used to predict crime – predictive policing, bail determinations, Child Protective Services red flags – it magnifies the biases already present in these systems, and, even worse, it gives this bias the veneer of scientific neutrality. This process is called "empiricism-washing," and you know you're experiencing it when you hear some variation on "it's just math, math can't be racist":

https://pluralistic.net/2020/06/23/cryptocidal-maniacs/#phrenology

When AI is used to replace customer service representatives, it systematically defrauds customers, while providing an "accountability sink" that allows the company to disclaim responsibility for the thefts:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

When AI is used to perform high-velocity "decision support" that is supposed to inform a "human in the loop," it quickly overwhelms its human overseer, who takes on the role of "moral crumple zone," pressing the "OK" button as fast as they can. This is bad enough when the sacrificial victim is a human overseeing, say, proctoring software that accuses remote students of cheating on their tests:

https://pluralistic.net/2022/02/16/unauthorized-paper/#cheating-anticheat

But it's potentially lethal when the AI is a transcription engine that doctors have to use to feed notes to a data-hungry electronic health record system that is optimized to commit health insurance fraud by seeking out pretenses to "upcode" a patient's treatment. Those AIs are prone to inventing things the doctor never said, inserting them into the record that the doctor is supposed to review, but remember, the only reason the AI is there at all is that the doctor is being asked to do so much paperwork that they don't have time to treat their patients:

https://apnews.com/article/ai-artificial-intelligence-health-business-90020cdf5fa16c79ca2e5b6c4c9bbb14

My point is that "worrying about AI" is a zero-sum game. When we train our fire on the stuff that isn't important to the AI stock swindlers' business-plans (like creating AI slop), we should remember that the AI companies could halt all of that activity and not lose a dime in revenue. By contrast, when we focus on AI applications that do the most direct harm – policing, health, security, customer service – we also focus on the AI applications that make the most money and drive the most investment.

AI hasn't attracted hundreds of billions in investment capital because investors love AI slop. All the money pouring into the system – from investors, from customers, from easily gulled big-city mayors – is chasing things that AI is objectively very bad at and those things also cause much more harm than AI slop. If you want to be a good AI critic, you should devote the majority of your focus to these applications. Sure, they're not as visually arresting, but discrediting them is financially arresting, and that's what really matters.

All that said: AI slop is real, there is a lot of it, and even though it doesn't warrant priority over the stuff AI companies actually sell, it still has cultural significance and is worth considering.

AI slop has turned Facebook into an anaerobic lagoon of botshit, just the laziest, grossest engagement bait, much of it the product of rise-and-grind spammers who avidly consume get-rich-quick "courses" and then churn out a torrent of "shrimp Jesus" and fake chainsaw sculptures:

https://www.404media.co/email/1cdf7620-2e2f-4450-9cd9-e041f4f0c27f/

For poor engagement farmers in the global south chasing the fractional pennies that Facebook shells out for successful clickbait, the actual content of the slop is beside the point. These spammers aren't necessarily tuned into the psyche of the wealthy-world Facebook users who represent Meta's top monetization subjects. They're just trying everything and doubling down on anything that moves the needle, A/B splitting their way into weird, hyper-optimized, grotesque crap:

https://www.404media.co/facebook-is-being-overrun-with-stolen-ai-generated-images-that-people-think-are-real/

In other words, Facebook's AI spammers are laying out a banquet of arbitrary possibilities, like the letters on a Ouija board, and the Facebook users' clicks and engagement are a collective ideomotor response, moving the algorithm's planchette to the options that tug hardest at our collective delights (or, more often, disgusts).

So, rather than thinking of AI spammers as creating the ideological and aesthetic trends that drive millions of confused Facebook users into condemning, praising, and arguing about surreal botshit, it's more true to say that spammers are discovering these trends within their subjects' collective yearnings and terrors, and then refining them by exploring endlessly ramified variations in search of unsuspected niches.

(If you know anything about AI, this may remind you of something: a Generative Adversarial Network, in which one bot creates variations on a theme, and another bot ranks how closely the variations approach some ideal. In this case, the spammers are the generators and the Facebook users whose reactions they elicit are the discriminators.)

https://en.wikipedia.org/wiki/Generative_adversarial_network

I got to thinking about this today while reading User Mag, Taylor Lorenz's superb newsletter, and her reporting on a new AI slop trend, "My neighbor’s ridiculous reason for egging my car":

https://www.usermag.co/p/my-neighbors-ridiculous-reason-for

The "egging my car" slop consists of endless variations on a story in which the poster (generally a figure of sympathy, canonically a single mother of newborn twins) complains that her awful neighbor threw dozens of eggs at her car to punish her for parking in a way that blocked his elaborate Hallowe'en display. The text is accompanied by an AI-generated image showing a modest family car that has been absolutely plastered with broken eggs, dozens upon dozens of them.

According to Lorenz, variations on this slop are topping very large Facebook discussion forums totalling millions of users, like "Movie Character…, USA Story, Volleyball Women, Top Trends, Love Style, and God Bless." These posts link to SEO sites laden with programmatic advertising.

The funnel goes:

i. Create outrage and hence broad reach;

ii. A small percentage of those who see the post will click through to the SEO site;

iii. A small fraction of those users will click a low-quality ad;

iv. The ad will pay homeopathic sub-pennies to the spammer.

The revenue per user on this kind of scam is next to nothing, so it only works if it can get very broad reach, which is why the spam is so designed for engagement maximization. The more discussion a post generates, the more users Facebook recommends it to.
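To see why reach matters so much, the funnel above can be multiplied out. The post gives no figures, so the rates and payout below are made-up placeholders, and this Python sketch only illustrates the shape of the arithmetic: each step shrinks the revenue, and the only lever left is the number of views. `funnel_revenue` and `views_needed` are hypothetical helpers.

```python
def funnel_revenue(views: int, click_through: float, ad_click: float,
                   pay_per_ad_click: float) -> float:
    """Expected payout for the four-step funnel; all rates are hypothetical inputs."""
    site_visits = views * click_through      # step ii: some viewers reach the SEO site
    ad_clicks = site_visits * ad_click       # step iii: a few of those click an ad
    return ad_clicks * pay_per_ad_click      # step iv: each ad click pays a sub-penny


def views_needed(target_dollars: float, click_through: float, ad_click: float,
                 pay_per_ad_click: float) -> float:
    """How many views it takes to earn target_dollars at the same hypothetical rates."""
    revenue_per_view = click_through * ad_click * pay_per_ad_click
    return target_dollars / revenue_per_view


# Placeholder assumptions: 2% click through, 1% of visitors click an ad,
# and each ad click pays half a cent.
rates = dict(click_through=0.02, ad_click=0.01, pay_per_ad_click=0.005)
print(funnel_revenue(1_000_000, **rates))   # roughly one dollar per million views
print(views_needed(100, **rates))           # roughly 100 million views for $100
```

Under these invented numbers, doubling any single rate only doubles the payout, while the view count scales it without limit. That is why the spam is built for engagement maximization.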

These are very effective engagement bait. Almost all AI slop gets some free engagement in the form of arguments between users who don't know they're commenting on an AI scam and people hectoring them for falling for the scam. This is like the free square in the middle of a bingo card.

Beyond that, there's multivalent outrage: some users are furious about food wastage; others about the poor, victimized "mother" (some users are furious about both). Not only do users get to voice their fury at both of these imaginary sins, they can also argue with one another about whether, say, food wastage even matters when compared to the petty-minded aggression of the "perpetrator." These discussions also offer lots of opportunity for violent fantasies about the bad guy getting a comeuppance, offers to travel to the imaginary AI-generated suburb to dole out a beating, etc. All in all, the spammers behind this tedious fiction have really figured out how to rope in all kinds of users' attention.

Of course, the spammers don't get much from this. There isn't such a thing as an "attention economy." You can't use attention as a unit of account, a medium of exchange or a store of value. Attention – like everything else that you can't build an economy upon, such as cryptocurrency – must be converted to money before it has economic significance. Hence that tooth-achingly trite high-tech neologism, "monetization."

The monetization of attention is very poor, but AI is heavily subsidized or even free (for now), so the largest venture capital and private equity funds in the world are pouring billions in public pension money and rich people's savings into CO2 plumes, GPUs, and botshit so that a bunch of hustle-culture weirdos in the Pacific Rim can make a few dollars by tricking people into clicking through engagement bait slop – twice.

The slop isn't the point of this, but the slop does have the useful function of making the collective ideomotor response visible and thus providing a peek into our hopes and fears. What does the "egging my car" slop say about the things that we're thinking about?

Lorenz cites Jamie Cohen, a media scholar at CUNY Queens, who points out that the subtext of this slop is "fear and distrust in people about their neighbors." Cohen predicts that "the next trend is going to be stranger and more violent."

This feels right to me. The corollary of mistrusting your neighbors, of course, is trusting only yourself and your family. Or, as Margaret Thatcher liked to say, "There is no such thing as society. There are individual men and women and there are families."

We are living in the tail end of a 40-year experiment in structuring our world as though "there is no such thing as society." We've gutted our welfare net, shut down or privatized public services, and all but abolished solidaristic institutions like unions.

This isn't mere aesthetics: an atomized society is far more hospitable to extreme wealth inequality than one in which we are all in it together. When your power comes from being a "wise consumer" who "votes with your wallet," then all you can do about the climate emergency is buy a different kind of car – you can't build the public transit system that will make cars obsolete.

When you "vote with your wallet" all you can do about animal cruelty and habitat loss is eat less meat. When you "vote with your wallet" all you can do about high drug prices is "shop around for a bargain." When you vote with your wallet, all you can do when your bank forecloses on your home is "choose your next lender more carefully."

Most importantly, when you vote with your wallet, you cast a ballot in an election that the people with the thickest wallets always win. No wonder those people have spent so long teaching us that we can't trust our neighbors, that there is no such thing as society, that we can't have nice things. That there is no alternative.

The commercial surveillance industry really wants you to believe that they're good at convincing people of things, because that's a good way to sell advertising. But claims of mind-control are pretty goddamned improbable – everyone who ever claimed to have managed the trick was lying, from Rasputin to MK-ULTRA:

https://pluralistic.net/HowToDestroySurveillanceCapitalism

Rather than seeing these platforms as convincing people of things, we should understand them as discovering and reinforcing the ideology that people have been driven to by material conditions. Platforms like Facebook show us to one another, let us form groups that can imperfectly fill in for the solidarity we're desperate for after 40 years of "no such thing as society."

The most interesting thing about "egging my car" slop is that it reveals that so many of us are convinced of two contradictory things: first, that everyone else is a monster who will turn on you for the pettiest of reasons; and second, that we're all the kind of people who would stick up for the victims of those monsters.

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/29/hobbesian-slop/#cui-bono

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#taylor lorenz#conspiratorialism#conspiracy fantasy#mind control#a paradise built in hell#solnit#ai slop#ai#disinformation#materialism#doppelganger#naomi klein

300 notes

Survivability Bias Pt 3

Masterpost

Content warning: This chapter involves depiction of a train derailment and subsequent fire throughout. There is also brief mention of death. I will be putting a brief summary in the description if you prefer not to read this part.

Danny jolts up from his fitful sleep. He’s intangible and invisible before he’s even fully sitting up and he’s in the air before he registers the loud boom that woke him. Any concerns about his bright transformation are made totally irrelevant by the warning sirens blaring in his head.

Wait, no. Those are real sirens.

In the distance, lights are now accompanying the sirens, flashing as they speed down what looks like main street. It’s pretty clear where they’re going, too, from the violent orange glow cascading over the tops of the nearby buildings.

I knew it, Danny thinks, flying towards whatever disaster is unfolding. Probably it’s stupid to get involved, when he still knows so little about this place, but- well, old habits die hard. It doesn’t take long for the problem to become obvious, and Danny freezes as he struggles to process the scene before him.

The metal carnage is like nothing Danny’s ever seen before; what looks to be a freight train has derailed at the worst possible location, sending its cars careening into the apartment buildings and stores on the east side of town. To make matters worse, one of them has clearly crashed straight into the gas station by the freeway, and the fire is spreading faster than Danny could have imagined.

Danny can already see two buildings blazing, but he quickly turns his attention to the wreckage of the train itself. Luckily, it’s fairly obvious which direction it was headed, and Danny moves fast, looking for the engine. In ghost form, physical sensations always feel a little more distant, but even through that, Danny can feel his heart rabbiting in his chest. It takes less than a minute to find the engine, but as he approaches it, the presence of death catches in his throat, and he immediately knows it’s a lost cause.

He can’t sense any actual ghosts, though, so instead Danny whips around to stare at the derailed cars. He’s far more used to fighting than to rescues, but he can hardly ignore the possibility of people trapped, so he carefully begins phasing through the wreckage, searching and listening for signs of anyone. Already, people are starting to gather outside, both those who were nearby and those who have managed to escape on their own, and Danny is careful to maintain his invisibility as he works.

By the time he spots the firetrucks arriving, Danny’s made it through about half the wreck; he’s pretty certain that nobody’s trapped under any of the cars, and he’s thinking more clearly. The fire has gotten worse, though, and Danny watches as fully equipped firefighters spill out onto the street, jumping straight to work as the fire chief shouts out orders. Some rush to start battling the flames, but others head towards the crowd.

They’re getting headcounts, Danny realizes. It seems so obvious in retrospect, but of course, Danny would have to be visible to check with anyone. And now that they’re here, anything he tries to do in secret would probably just make things harder. There is, of course, an easy solution to that, but- Danny has yet to find any evidence that all the meta stuff is anything but propaganda.

Even as Danny considers the dilemma, he knows what he’s going to do. He’s survived danger before, after all, and if he can keep people from assuming ghost, then he’ll have an advantage on them even if it comes to the worst. Besides, there’s that whole great powers-great responsibility thing, so in a way, it’s kind of his responsibility...

Danny floats out of the wreckage before shifting into visibility, figuring it’s probably polite to approach in their field of sight.

“What can I do to help?” Danny asks, causing most of the crowd to stare in shock. Belatedly he realizes he’s still floating, but actually that’s probably a good thing. Makes it clear he’s a meta right off the bat, at least.

“New hero, huh? Powerset?” The man responds promptly, leveling Danny with an even gaze. Probably the lack of shock is a good thing. Probably.

“Uh, flight obviously, enhanced strength as well, and um... The ability to turn people and things intangible?” Danny answers. It’s far from his full set, but he figures those are the most relevant, and keeping most of his tricks up his sleeve makes him feel better about what he’s doing.

“Is the fire gonna hurt you? I’m not sending some kid in there to die of third degree burns or smoke inhalation.” The man frowns, giving Danny the distinct feeling he’s not particularly impressed with Danny’s answer.

“Oh! Yeah, no, I’ll be fine! I, like, don’t exactly need to breathe? And I’m fine in extreme heat too, so it shouldn’t be a problem...” Danny trails off, trying not to flinch under the head firefighter’s narrowed, assessing look. To Danny’s right, someone shouts, and when he turns to look, he sees a firefighter wave their arm and plant some kind of flag before moving on. No longer paying attention to Danny, the chief walks over and speaks to another firefighter. Danny wonders if he’s been dismissed, but before he can do anything, the chief calls out to him.

“Alright kid, you’re up, I guess,” he says, when Danny walks over. “We don’t know how injured he is, so do not move him, but if you’re strong enough to move this stuff fast and safe, that’ll be a damn good help.” He gestures to the twisted mess that Danny’s pretty sure was the edge of a building.

Danny nods, stepping forward to examine the rubble. The firefighter that spotted the man points to a couple beams.

“Those beams are protecting him from the worst of it right now, but we’ll need to move them in order to get him out, so you gotta make sure that there’s nothing that’ll fall on him when you move them.”

“Righty-o,” Danny says, stepping forward to grab the two support beams the firefighter had pointed to. He carefully examines the rubble collapsed around and over them. It’s sort of like a puzzle, he realizes - not quite the same as fixing his parents’ tech; certainly nothing here is supposed to be smashed together like that, but-

Danny blinks and refocuses. If he just moves a few things first, he thinks he can get enough cleared away and just intange the beams. He tries to be fast as he does, without forgetting the emphasis the chief had put on safety, and after a few moments, he’s ready to move the beams. He gets into a good position, and then carefully makes them intangible, ready to react if anything bad happens. When nothing does, he carefully pulls them up and away, watching as the waiting firefighters rush in and start to work on actually extracting the guy.

He watches for a bit as a backboard appears and they begin a very slow and careful process of getting the guy onto it.

“Kid,” the chief calls, pulling Danny’s attention away. The chief guides him towards one of the buildings that’s on fire. “Got two people trapped on the third floor here. The stairs are unsafe, so if you can, get yourself up there, locate them, and get them out.”

Danny nods, not waiting for further instruction. He flies up two floors, and goes straight through the wall with his intangibility. The majority of this building isn’t terribly damaged, but one side has collapsed in on itself so if that’s where the stairs were, he can understand the difficulty. The air inside is already thick with smoke, and he quickly stops breathing, belatedly remembering that he’s supposed to not get smoke inhalation. Luckily, it doesn’t take long to catch the sound of voices, and Danny follows it to a room where two people are huddled next to an open window. Right, that’s a smart way to limit the danger of the smoke.

“Ride’s here!” Danny announces cheerfully, dropping his intangibility. Both people startle as they spot him, but one recovers relatively quickly.

“Him first,” they say, nodding towards their companion, who definitely looks more dazed.

“Right, here we go!” Danny says, stepping forward to scoop the person up, and wastes no time flying directly through the building and down to the waiting paramedics. There’s no stretcher currently available, so Danny gently sets them on the ground away from the worst of the smoke before flying back to get the other person. They’re already standing, and waste no time wrapping their arms around his neck as he picks them up and flies them out to the medics as well.

Danny hardly has time to set the person down, before the chief is pulling him away again. They send him in to save a couple other trapped people, but after that, it sounds like everybody is accounted for, because the chief starts focusing all their energy on putting out the fire, rather than just containing it.

Danny is surprised to find himself pulled into helping with this part too. He gets assigned to a fire attack team, and he trails along after the two firefighters as they enter the building and begin to fight the fire from the inside. Occasionally, one of them will point at some piece of wall or ceiling and ask him to check what’s on the other side. He goes where they say, looking for signs of the fire, and when he does spot flames, he sometimes tears stuff down so they can get to it with their fire hose. It’s honestly a fascinating process. Danny’s never been anywhere near a major fire, and the fact that the firefighters actually do more damage to the building as they work echoes in Danny’s brain as a morbid refrain.

What they’re doing is clearly working though, because he can actually feel the ambient temperature going down as time goes on. He briefly wonders if he should be trying to use his ice powers when one of his teammates complains about how hot it is, but they have protection, and he doesn’t want to risk any more info on him getting out. And anyways, he’s busy enough just doing his job. By the time they leave the building, Danny is exhausted. The interrupted night’s sleep is making itself known, alongside the surprising realization that Danny has actually worked harder tonight than he ever has before.

He lets himself half-collapse against a wall beside one of the fire trucks once they finish putting out the fire. Beside him, his teammates are divesting themselves of their gear. It’s funny, Danny was anxious about revealing himself at first, but this whole night - and Danny belatedly realizes the sun is beginning to drift above the horizon now - he’s not been scared at all. Sure, he’s been worried; with people in danger he’s hardly going to feel good, but in the last few hours he’s both worked harder than he has in any of his fights and done it without feeling terrible. Now, with everyone accounted for and just about all of them either fine or in the hands of doctors, he feels odd.

It’s not a bad feeling or anything. It’s kind of like when he successfully beats a hard level in a video game, or how he used to feel when he finished science projects in middle school.

Satisfaction, he realizes. And that’s what it is, though it’s far stronger than any version of it that he’s ever felt before. He does have a lot to feel proud of too. He helped, even though he wasn’t sure it was safe to, and he might’ve actually saved somebody’s life tonight.

“You did good, kid.” One of his teammates says, echoing Danny’s thoughts. He startles a bit, feels himself flushing, and then in his embarrassment, he feels himself tumble over into a full blush. It’s always felt more embarrassing blushing in his ghost form. The way his skin actually glows with a green tinge is just so obviously inhuman, and he tries to avoid it, tries his best to seem normal and alive, even when he’s a ghost.

Of course, these people don’t know he’s a ghost, but from what he’s seen, most of the heroes out there at least look functionally human, and he waits for the firefighters around him to freak out at the reminder that he isn’t even remotely one of them.

“Damn,” one whistles. “Green glow is a new one. Makes your freckles real cute though.” The others laugh, and his other teammate steps forward to pat him gently on the back.

“Stop embarrassing my new favorite hero,” the chief says, walking up to join them. “You gotta name?”

“Oh, yeah!” Danny answers, desperate for a distraction from this line of conversation. “I’m Danny!”

“Danny,” the chief responds flatly. He almost sounds exasperated, though Danny can’t imagine why, unless...

Unless that sounds exactly like he just introduced himself normally, and since they think he’s a hero, he sounds like a dumbass without a secret identity, which- technically isn’t exactly wrong.

“Yup!” Danny says, trying to make it sound intentional. “Danny Phantom at your service! Y’know cause of the intangibility and like. It just sounded good?” There. That sounds plausible. If he actually does end up having to be a hero, though, he’ll probably need a different first name. If he gets a civilian identity, that is.

“Well, Phantom,” the chief grins, that same assessing look from before back, but noticeably more relaxed now that there’s no immediate danger. “We’re damn grateful for all your help, and if you need anything you come let us know, alright?”

“Yeah,” one of his teammates echoes. “You’re an honorary firefighter now, you should come hang out at the station sometime!” A couple of the others join in. It’s surprisingly kind, and Danny smiles at the unrelenting wave of welcome.

“I’ll think about it,” he offers uncertainly. “For now, I think I ought to go back to sleep for a few more hours.”

“That sounds like a good idea, Danny,” the chief says. “Just make sure to get something to eat first. You’ve burned a lot of calories today.”

“Yeah, will do.” Danny offers the man an awkward salute, and then, before he can actually fall asleep standing up, he takes off to hunt down a good spot for a nap.

#dp x dc#woooh! i am actually so fucking proud of this chapter like ahhhhh#of what ive posted so far its probably gone through the most rounds of edits which is pretty typical for my more action-oriented scenes#but also its because it ended up crystallizing a lot of the central themes in this fic for me#from here stuff is gonna get really good i think#train derailment#building fire#death mention tw#feels kind of silly adding that last one to a dp fic but i wanna be careful abt it


I love the Naruto and Sasuke reunion.

When a character is suffering from some illness and an author wants to foreshadow this, one way to do so is by having the character exhibit symptoms of said illness.

Sasuke's personal affliction is the weakness that he associates with having personal bonds and connections to other people. This is something that he's forced to reconcile with during VOTE 2 with Naruto, who was Sasuke's weakness and the only person that Sasuke felt he needed to cut off to be lonely. But before we get to that moment there are hints along the way.

Now, during the reunion we hear Sasuke discuss this personal view of what bonds are. There's the repetition of the "bonds = weakness" motif that crops up throughout the manga, but more specifically, Sasuke states that the confusion that arises from having too many bonds is what causes that weakness.

This is where the first seeds of SNS foreshadowing were planted post-timeskip... I looked through 9 different sources (in the order they popped up on the search engine I used, so there was no bias or selective filtering) to get a general consensus, and one of the primary symptoms of confusion/delirium is distractibility. Distractibility is characterized by diminished alertness and uncertainty about what's going on around you.

And during the SNS reunion, we get just that. Right after Kurama ominously warns Sasuke that he'll regret killing Naruto, Sasuke, who Kishimoto made a show of pointing out surpassed Team 7 in strength, pointedly stares at Naruto to the point that he ignores Yamato. Furthermore, Sasuke's lack of external awareness is highlighted in the manga when Sasuke is narratively punished for this oversight with Captain Yamato's retaliation. The significance of this scene lies not in the fact that Sasuke stared at Naruto, but in the fact that Sasuke lost control when staring at Naruto. Kishimoto wanted this moment to be taken note of. It's a manifestation of Naruto being a "temptation" and cause of confusion.

And the scene itself imparts a romantic connotation that's validated by the text.

Previously, I briefly discussed my view of romance, which I'll elaborate on a bit in this post, using the reunion scene as an example.

So, from a scientific perspective, romantic love has been shown to be correlated with reduced cognitive control. In other words, romantic love is linked with distractibility and a decreased ability to focus on tasks.

One of the ways this scientific *fact* has manifested itself in a shared socioculturally produced convention is through the Distracted by the Sexy Trope [an aside: I dislike the name of this trope], which is characterized by a character losing control (whether it's walking into walls or dropping whatever it is they're holding) at the sight of someone they find attractive.

And furthermore, this is a romantic trope that is promoted by the Naruto text. During the battle with Kaguya, we see the Distracted by the Sexy Trope manifest when Naruto uses the reverse harem jutsu on Kaguya, and she gets distracted to the point that she's temporarily caught off guard by the enemy. Now doesn't that sound familiar?

In both cases (Reunion and War Arc) there's significant narrative focus on the act of staring, and that act of staring has a tangible impact on the plot.

So not only does the Naruto and Sasuke reunion function as a way to show Sasuke still hadn't managed to cut his ties with Naruto, it's framed in an incredibly romantic way with a common romcom trope.

... and this is just Sasuke's side. I haven't even discussed the way Naruto reacted towards Sasuke in this scene. But anyway, I think their reunion is neat. I also love the narrative progression from Kurama warning Sasuke that he'd regret killing Naruto directly to Naruto causing Sasuke to lose his external awareness. Kishimoto couldn't have been more clear about his intentions and the fact that the latter scene was an extension of the former and that both were connected.


too many thoughts on the new hbomberguy video not to put them anywhere so:

with every app trying to turn into the clock app these days by feeding you endless short form content, *how many* pieces of misinformation does the average person consume day to day?? thinking a lot about how tons of people on social media go largely unquestioned about the information they provide just because they speak confidently into the camera. if you're scrolling through hundreds of pieces of content a day, how many are you realistically going to have the time and will to check? i think there's an unfortunate subconscious bias in liberal and leftist spaces that misinformation is something that is done only by the right, but it's a bipartisan issue babey. everybody's got their own agendas, even if they're on "your side". *insert you are not immune to propaganda garfield meme*

and speaking of fact checking, can't help but think about how much the current state of search engines Sucks So Bad right now. not that this excuses ANY of the misinformation at all, but i think it provides further context as to why these things become so prevalent in creators who become quick-turnaround-content-farms and cut corners when it comes to researching. when i was in high school and learning how to research and cite sources, google was a whole different landscape that was relatively easy to navigate. nowadays a search might give you an ad, a fake news article, somebody's random blog, a quora question, and another ad before actually giving you a relevant verifiable source. i was googling a question about 1920s technology the other day (for a fanfiction im writing lmao) and the VERY FIRST RESULT google gave me was some random fifth grader's school assignment on the topic???? like?????? WHAT????? it just makes it even harder for people to fact-check misinformation too.

going off the point of cutting corners when it comes to creating content, i can't help but think about capitalism's looming influence over all of this too. again, not as an excuse at all but just as further environmental context (because i really believe the takeaway shouldn't be "wow look how bad this one individual guy is" but rather "wow this is one specific example of a much larger systemic issue that is more pervasive than we realize"). a natural consequence of the inhumanity of capitalism is that people feel as if they have to step on or over each other to get to 'the top'. if everybody is on this individualistic american dream race to success, everyone else around you just looks like collateral. of course then you're going to take shortcuts, and you're going to swindle labor and intellectual property from others, because your primary motivation is accruing capital (financial or social) over ethics or actual labor.

i've been thinking about this in relation to AI as well, and the notion that some people want to Be Artists without Doing Art. they want to Have Done Art but not labor through the process. to present something shiny to the world and benefit off of it. they don't want to go through the actual process of creating, they just want a product. Easy money. Winning the game of capitalism.

i can't even fully fault this mentality- as someone who has been struggling making barely minimum wage from art in one of the most expensive cities in america for the past two years, i can't say that i haven't been tempted on really difficult occasions to act in ways that would be morally bad but would give me a reprieve from the constant stress cycle of "how am i going to pay for my own survival for another month". the difference is i don't give in to those impulses.

tl;dr i hope that people realize that instead of this just being a time to dogpile on one guy (or a few people), that it's actually about a larger systemic problem, and the perfect breeding grounds society has created for this kind of behavior to largely go unchecked!!!

#hbomberguy#james somerton#idk if any of this is coherent it just needed to get out of me#misinformation#capitalism is hell!


Rant about generative AI in education and in general under the cut because I'm worried and frustrated and I needed to write it out in a small essay:

So, context: I am a teacher in Belgium, Flanders. I am now teaching English (as a second language), but have also taught history and Dutch (as a native language). All in secondary education, ages 12-16.

More and more I see educational experts endorse ai being used in education and of course the most used tools are the free, generative ones. Today, one of the colleagues responsible for the IT of my school went to an educational lecture where they once again vouched for the use of ai.

Now their keyword is that it should always be used in a responsible manner, but the issue is... can it be?

1. Environmentally speaking, ai has been a nightmare. Not only does it have an alarming impact on emission levels, but also on the toxic waste that's left behind. Not to mention the scarcity of GPUs caused by the surge of ai in the past few years. Even sources that would vouch for ai have raised concerns about the impact it has on our collective health. sources: here, here and here

2. Then there's the issue with what the tools are trained on and this in multiple ways:

Many of the free tools that the public uses are trained on content available across the internet. However, it is at this point common knowledge (I'd hope) that most creators of the original content (writers, artists, other creative content creators, researchers, etc.) were never asked for permission and so it has all been stolen. Many social media platforms will often allow ai training on them without explicitly telling the user-base or will push it as the default setting and make it difficult for their user-base to opt out. Deviantart, for example, lost much of its reputation when it implemented such a policy. It had to backtrack in 2022 afterwards because of the overwhelming backlash. The problem is then that since the content has been ripped from its context and is no longer made by a human, many governments can no longer see it as copyrighted. Which, yes, luckily also means that ai users are legally often not allowed to pass off ai as 'their own creation'. Sources: here, here

Then there's the working of generative ai in general. As said before, it simply rips words or image parts from their original, nuanced context and then meshes them together without the user being able to accurately trace back where the info is coming from. A tool like ChatGPT is not a search engine, yet many people use it that way without realising it is not the same thing at all. More on the working of generative ai in detail. Because of how it works, there is always a chance for things to be biased and/or inaccurate. If a tool has been trained on social media sources (which ChatGPT for example is) then its responses can easily be skewed to the demographic it's been observing. Bias is an issue in most sources when doing research, but if you have the original source you also have the context of the source. Ai makes it so that the original context is no longer clear to the user, and so bias can be overlooked and go unnoticed much more easily. Source: here

3. Something my colleague mentioned they said in the lecture is that ai tools can be used to help the learning of the students.

Let me start off by saying that I can understand why there is an appeal to ai when you do not know much about the issues I have already mentioned. I am very aware it is probably too late to fully stop the wave of ai tools being published.

There are certain uses to types of ai that can indeed help with accessibility. Such as text-to-voice or the other way around for people with disabilities (let's hope the voice was ethically begotten).

But many of the other uses mentioned in the lecture I have concerns with. They are to do with recognising learning, studying and wellbeing patterns of students. Not only do I not think it is really possible to data-fy the complexity of each and every single student you would have as they are still actively developing as a young person, this also poses privacy risks in case the data is ever compromised. Not to mention that ai is often still faulty and, as it is not a person, will often still make mistakes when faced with how unpredictable a human brain can be. We do not all follow predictable patterns.

The lecture stated that ai tools could help with neurodivergency 'issues'. Obviously I do not speak for others and this next part is purely personal opinion, but I do think it important to nuance this: as someone with auDHD, no ai-tool has been able to help me with my executive dysfunction in the long-term. At first, there is the novelty of the app or tool and I am very motivated. They are often in the form of over-elaborate to-do lists with scheduled alarms. And then the issue arises: the ai tries to train itself on my presented routine... except I don't have one. There is no routine to train itself on, because that is my very problem I am struggling with. Very quickly it always becomes clear that the ai doesn't understand this the way a human mind would. A professionally trained in psychology/therapy human mind. And all I was ever left with was the feeling of even more frustration.

In my opinion, what would help way more than any ai tool would be funding mental health care and making sure that going to a therapist, psychiatrist or coach is covered by health care the same way my doctor visits are: I only have to pay 5 euros to my doctor while my health care provider pays the rest (in Belgium). This would make mental health care much more accessible and would have a greater impact than faulty ai tools.

4. It was also said that ai could help students with creative assignments and preparing for spoken interactions both in their native language as well as in the learning of a new one.

I wholeheartedly disagree. Creativity in its essence is about the person creating something from their own mind and putting the effort in to translate those ideas into their medium of choice. Stick figures on lined course paper are more creative than letting a tool like Midjourney generate an image based on stolen content. How are we teaching students to be creative when we allow them to not put a thought in what they want to say and let an ai do it for them?

And since many of these tools are also faulty and biased in their content, how could they accurately replace conversations with real people? Ai cannot fully understand the complexities of language and all the nuances of the contexts around it. Body language, word choice, tone, volume, regional differences, etc.

And as a language teacher, I can truly say there is nothing more frustrating than wanting to assess the writing level of my students, giving them a writing assignment where they need to express their opinion and write it in two tiny paragraphs... and getting an ai response back. Before anyone tells me that my students may simply be very good at English: some students are, but my current students are not. They are precious, but their English skills are very flawed. It is very easy to see whether they wrote it or ChatGPT did. It is not only frustrating not to be able to trust part of your students' honesty and to know they learned nothing from the assignment because you can't give any feedback; it is almost offensive that they think I wouldn't notice it.

5. Apparently, it was mentioned in the lecture that in schools where ai is banned currently, students are fearful that their jobs would be taken away by ai and that in schools where ai was allowed that students had much more positive interactions with technology.

First off, I was not able to see the source and data that this statement was based on. However, I personally cannot shake the feeling there's a data bias in there. Of course students will feel more positively towards ai if they're not told about all the concerns around it.

Secondly, the fact that the lecture (reportedly) framed the fear of losing your job to ai as unfounded is... infuriating. Because it is already becoming a reality. Let's not forget what partially caused the SAG-AFTRA strike in 2023. Corporations see an easy (read: cheap) way to get marketable content by using ai at the cost of the creative professionals. Unregulated ai use by businesses causing the loss of jobs for real-life humans is very much a threat. Dismissing this is basically lying to young students.

6. My conclusion:

I am frustrated. It's clamoured that we, as teachers, should educate more about ai and its responsible use. However, at the same time, the many concerns and issues around most of the accessible ai tools are swept under the rug and not actively talked about.

I find the constant surging rise of generative ai everywhere very concerning and I can only hope that more people will start seeing it too.

Thank you for reading.


Kind of weird that amidst Hawkules' allegations against Sparck and the growing movement stemming from it that Kingsisle puts out a clearly rushed promotion for Hawkules, the companion, that would likely shift search engine results closer to any in-game mentions of Hawkules again.

Aw no you're right, that's a little crazy, it's all got to be coincidence that this is perfectly timed to distance the company from the allegations and any resulting pressure.

Whatever did happen with Hawkules, I don't really appreciate that Kingsisle's response seems to be dodging the situation as much as possible. I know it's just typical corporate behavior, as some people end up quick to point out, but it's also alright for me to be uncomfortable with this small team I grew up idolizing becoming more and more corporate.

To finish this off, I'm not going to be making any big claims about the Hawkules situation myself. Do I believe Hawkules? Absolutely. I feel for both Hawkules and Kingsisle itself as well, given his point regarding Sparck having manipulated the people at KI. However, that is where the problem lies. Regarding this situation I am clouded by personal bias and emotion. I dislike Sparck (the employee), and I think many people could share that sentiment. He feels incompetent, cold, and a bit creepy, and Hawkules' tweet played directly into that. Saying all of that makes me wonder why he's in a position focused on the community even without these allegations, but I digress. I'm biased, emotional, and lack the evidence to, in good conscience, end this with something that says someone is right or wrong.

What I will say though is go learn about the situation yourself. Keep the fire burning until we have results. If Hawkules lied, we deserve to know. If Sparck is as rough as Hawkules claimed him to be, we deserve a better leader for our community. Whatever happens, for the love of Bartleby do not let this all get swept under the rug.

#pirate101#p101#kingsisle#hawkules#sparck#lacking whimsy sorry#w101#wizard101#pitty101#wizzy101#also sorry i didn't link to anything#idk how that goes over on tumblr so

https://www.tumblr.com/ambersock/744038915057549312

Hi! I just saw your tags on this post, would you mind elaborating on this bias?

I’ve heard about the Robert Singer bias and some of the Buckleming bias through Tumblr, but I am not familiar with their DVD commentary (at least for Buckleming; I’ve heard some of Singer’s).

Thank you in advance!

ask from @supamerchant (@whorewhouse had a similar ask)

The Buckleming Agenda has been cited numerous times, including by them specifically. Tumblr being what it is, it's difficult to dig up the specific receipts through the search engine, but essentially they have admitted to being fascinated (read: obsessed) with Mark Pellegrino and went out of their way to bring him in as much as possible. They initially tried to shoehorn in a redemption arc for Lucifer, even adding some comedic "buddy" moments between Lucifer and Sam, and thankfully Jared put his foot down and squashed that crap. Reportedly they had written a script including those comedic moments and Jared flat out refused to even do a table read.

As for Singer, it's more about what he doesn't say in the commentaries. He will go on and on about Jensen's very minor acting choices, yet he never once has a kind word to say about Jared. The best example is the 4x21 commentary, where he gushes about Jensen turning his head slightly at the top of the stairs at the beginning of the episode (as if no other actor on Earth ever thought to use a head gesture, so Jensen must be a gENiUs). Then during Jared's scenes in the panic room, there's not one word from Singer about Jared's objectively amazing performance. Instead, Singer bitches about how tough it was to shoot in a small space and talks about the cost of the crane shot. During the scene between Sam and "Mary", it's Sera who talks about how incredible Jared is at expressing that internal torment. They also talk about the scene where Bobby has the gun pointed at Sam, and Sam moves the gun up to his heart and says, "then shoot". Singer compliments the choice, thinking it was Sera's idea, and Sera has to correct him and give the credit to Jared.

🔘 Wednesday - ISRAEL REALTIME - Connecting to Israel in Realtime

▪️HUMAN RIGHTS WATCH REPORT - HAMAS COMMITTED A SYSTEMATIC ASSAULT AGAINST CIVILIANS.. The report condemned what the rights organization said were various war crimes and crimes against humanity, including “deliberate and indiscriminate attacks against civilians,” the use of civilians as human shields, and cruel and inhumane treatment, finding Hamas complicit with Oct. 7 war crimes. https://www.hrw.org/news/2024/07/17/october-7-crimes-against-humanity-war-crimes-hamas-led-groups

.. HAMAS - NO WE DIDN’T.. “We reject the lies contained in the Human Rights Watch report, the blatant bias for Israel and the lack of professionalism and credibility, and demand they withdraw it and apologize for it.“

▪️HEZBOLLAH THREATENS.. “The first step will be the launch of about 10,000 missiles at military targets as far as southern Israel. In the second stage, the Air Force is disabled. The third stage is a ground invasion towards settlements near the fence, killing and taking hostages.”

▪️HEZBOLLAH LEADER SAYS.. “fighting is a custom and honor (for us) martyrdom (is) from god."

▪️SMUGGLING TUNNELS.. The IDF believes it will take many more months to complete the search for Hamas's cross-border smuggling tunnels along the Gaza-Egypt border. So far, around 25 tunnels have been located. Combat engineers are currently meticulously sweeping the entire Gaza-Egypt border area in Rafah, while expanding the Philadelphi border corridor by demolishing structures within about 800 meters of the border.

▪️SICK CRIME & RUMORS.. a mother killed her young son yesterday in some kind of mental break. Rumors immediately swirled that the mother was a survivor of the Oct. 7 massacre. Bituach Leumi: this is untrue.

▪️SOCIETAL CONFLICT.. Last night a bus of soldiers returning from Gaza was, weirdly, diverted through Meah Shearim, the most ultra of ultra-orthodox neighborhoods in Israel. On seeing a bus of soldiers, the locals began to harass and pelt the bus - perhaps assuming they are coming to haul them away to the army.

▪️PROTEST - ANTI-ATTORNEY GENERAL.. Activists of the "If You Want" movement came this morning to demonstrate in front of the home of the Legal Adviser to the Government, Gali Baharav-Miara, in protest of the normalization of violations of public order.

▪️WHY NOT MORE ULTRA-ORTHODOX CONSCRIPTIONS? Foreign Affairs and Defense Committee Knesset members ask why the IDF doesn’t issue conscription orders to all yeshiva students of age. The IDF replies: "The conscription bureau doesn't know how to take in more." (( The IDF has for years avoided creating programs for this segment of society. ))

▪️NO CYBERTRUCKS FOR ISRAEL? The Ministry of Transport forbids Tesla's Cybertruck from being tested or driven on Israeli roads. The amazing reason: the vehicle is (lightly) bulletproof. In Israel, a special permit is required to import a bulletproof vehicle, and the Cybertruck did not receive such a permit.

⭕ OVER 80 ROCKETS FIRED BY HEZBOLLAH towards Mt. Meron and surrounding areas last night, another 15+ FIRED AT NAHARIYA area.

♦️COUNTER-TERROR OPS - JENIN.. Arab channels show apparent special forces operating in Jenin with firefights.

♦️COUNTER-TERROR OPS - KALKILYA.. Firefight.

♦️SIGNIFICANT TARGETED AIRSTRIKES in CENTRAL GAZA overnight.

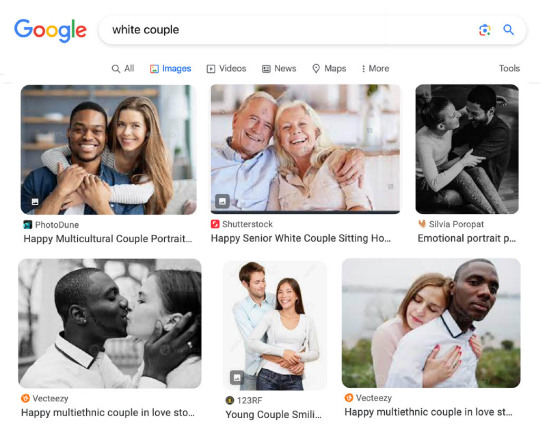

Google’s push to lecture us on diversity goes beyond AI

by Douglas Murray

The future is here. And it turns out to be very, very racist.

As the New York Post reported yesterday, Google’s Gemini AI image generator aims to have a lot of things. But historical accuracy is not among them.

If you ask the program to give you an image of the Founding Fathers of this country, the AI will return you images of black and Native American men signing what appears to be a version of the American Constitution.

At least that’s more accurate than the images of popes thrown up. A request for an image of one of the holy fathers gives up images of — among others — a Southeast Asian woman. Who knew?

Some people are surprised by this. I’m not.

Several years ago, I went to Silicon Valley to try to figure out what the hell was going on with Google Images, among other enterprises.

Because Google images were already throwing up a very specific type of bias.

If you typed in “gay couples” and asked for an image search, you got lots of happy gay couples. Ask for “straight couples” and you got images of, er, gay couples.

It was the same if you wanted to see happy couples of any orientation.

Ask for images of “black couples” and you got lots of happy black couples. Ask for “white couples” and you got black couples, or interracial couples. Many of them gay.

I asked people in Silicon Valley what the hell was going on and was told this was what they call “machine learning fairness.”

The idea is that we human beings are full of implicit bias and that as a result, we need the machines to throw up unbiased images.

Except that the machines were clearly very biased indeed. Much more so than your average human.

What became clear to me was that this was not the machines working on their own. The machines had been skewed by human interference.

If you ask for images of gay couples, you get lots of happy gay couples. Ask for straight couples and the first things that come up are a piece asking whether straight couples should really identify as such. The second picture is captioned, “Queer lessons for straight couples.”

Shortly after, you get an elderly gay couple with the tag, “Advice for straight couples from a long-term gay couple.” Then a photo with the caption, “Gay couples have less strained marriages than straight couples.”

Again, none of this comes up if you search for “gay couples.” Then you get what you ask for. You are not bombarded with photos and articles telling you how superior straight couples are to gay couples.

It’s almost as though Google Images is trying to force-feed us something.

It is the same with race.

Ask Google Images to show you photos of black couples and you’ll get exactly what you ask for. Happy black couples. All heterosexual, as it happens.

But ask the same engine to show you images of white couples and two things happen.

You get a mass of images of black couples and mixed-race couples and then — who’d have guessed — mixed-race gay couples.

Why does this matter?

Firstly, because it is clear that the machines are not lacking in bias. They are positively filled with it.

It seems the tech wants to teach us all a lesson. It assumes that we are all homophobic white bigots who need re-educating. What an insult.

Secondly, it gives us a totally false image — literally — of our present. Now, thanks to the addition of Google Gemini, we can also be fed a totally false image of our past.

Yet the interesting thing about the past is that it isn’t the present. When we learn about the past, we learn that things were different from now. We see how things actually were and that is very often how we learn from it.

How were things then? How are they now? And how do they compare?

Faking the past or altering it completely robs us of the opportunity not just to understand the past but to understand the present.

Google has said it is going to call a halt to Gemini. Mainly because there has been backlash over the hilarious “diversity” of Nazi soldiers that it has thrown up.

If you search for Nazi officers, it turns out that there were black Nazis in the Third Reich. Who knew?

While Google Gemini gets over that little hurdle, perhaps it could realize that it’s not just the Gemini program that’s rotten but the whole darn thing.

Google is trying to change everything about the American and Western past.

I suggest we don’t let it.

There was an old joke told in the Soviet Union that now seems worryingly relevant to America in the age of AI: “The only thing that’s certain is the future. The past keeps on changing.”

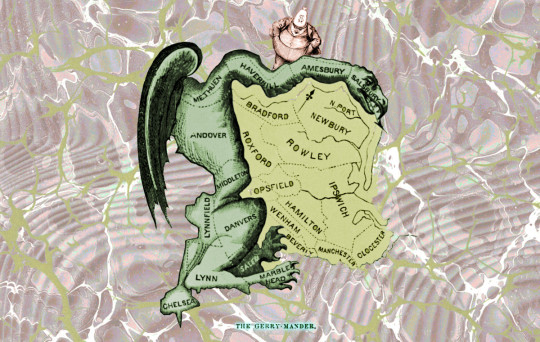

Monopoly is capitalism's gerrymander

For the rest of May, my bestselling solarpunk utopian novel THE LOST CAUSE (2023) is available as a $2.99, DRM-free ebook!

You don't have to accept the arguments of capitalism's defenders to take those arguments seriously. When Adam Smith railed against rentiers and elevated the profit motive to a means of converting the intrinsic selfishness of the wealthy into an engine of production, he had a point:

https://pluralistic.net/2023/09/28/cloudalists/#cloud-capital

Smith – like Marx and Engels in Chapter One of The Communist Manifesto – saw competition as a catalyst that could convert selfishness to the public good: a rich person who craves more riches still will treat their customers, suppliers and workers well, not out of the goodness of their heart, but out of fear of their defection to a rival:

https://pluralistic.net/2024/04/19/make-them-afraid/#fear-is-their-mind-killer

This starting point is imperfect, but it's not wrong. The pre-enshittified internet was run by the same people who later came to enshittify it. They didn't have a change of heart that caused them to wreck the thing they'd worked so hard to build: rather, as they became isolated from the consequences of their enshittificatory impulses, it was easier to yield to them.

Once Google captured its market, its regulators and its workforce, it no longer had to worry about being a good search-engine – it could sacrifice quality for profits, without consequence:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

It could focus on shifting value from its suppliers, its customers and its users to its shareholders:

https://pluralistic.net/2024/05/15/they-trust-me-dumb-fucks/#ai-search

The thing is, all of this is well understood and predicted by traditional capitalist orthodoxy. It was only after a gnostic cult of conspiratorialists hijacked the practice of antitrust law that capitalists started to view monopolies as compatible with capitalism:

https://pluralistic.net/2022/02/20/we-should-not-endure-a-king/

The argument goes like this: companies that attain monopolies might be cheating, but because markets are actually pretty excellent arbiters of quality, it's far more likely that, if we discover that everyone is buying the same product from the same store, this is the best store, selling the best products. How perverse would it be to shut down the very best stores and halt the sale of the very best products merely to satisfy some doctrinal reflex against big business!